rebuild postfix virtual.db

After adding new entries to the Postfix virtual file, virtual.db needs to be rebuilt:

postmap virtual

This creates the virtual.db file from virtual.
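
A minimal sketch of the same step wrapped in a check, so the table is only rebuilt when the source file has changed. The function name is made up for illustration, the map path is whatever you pass in, and the `-nt` test is a common shell extension rather than strict POSIX:

```shell
# Rebuild the lookup table only when the map is newer than its .db file.
# rebuild_if_stale is a hypothetical helper name; the map path is passed in.
rebuild_if_stale() {
    map="$1"
    if [ ! -e "$map.db" ] || [ "$map" -nt "$map.db" ]; then
        # postmap writes <map>.db next to the source file
        postmap "$map" && echo "rebuilt $map.db"
    else
        echo "$map.db is up to date"
    fi
}
```

Called as `rebuild_if_stale /etc/postfix/virtual`, assuming that is where your virtual file lives.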

good stuff

Looking back over the last year, I was thinking about which new application has made the most impact on my day-to-day work. My first thought was Xen and what it’s done for the servers, but that path had been started down before I took over the reins as sysadmin. I also don’t feel comfortable putting Xen in the category of applications, as it seems an ill fit. So the application that has made the most impact on the way I handle things, and on the direction I’ve pushed the company, would be Webistrano. By implementing Webistrano I’ve been better able to set up beta, staging and production environments, and the ease of the user interface has allowed me to remove myself from many instances where I would otherwise be deeply involved in a deployment. Had it not been for our switch to Rails and the programmers pointing this tool out, I doubt I would ever have entertained running it. Webistrano has also helped me enforce a better “hands off the production servers” policy, which I believe will help us deliver a more reliable product in the end. I’m not using Webistrano just for Rails either; it’s pushing out some of our static sites and updating a combined mod_perl and Rails application we are running. I’ve really just scratched the surface of what can be done with Webistrano (and Capistrano), and I’m looking forward to the point when I can spend more time developing the services, get better at Ruby and author some better deployment add-ons.

curves

For the last six months we’ve been dealing a lot with Rails at work. I wouldn’t say I’m an old school Perl guy, but it’s what I cut my web teeth on and the language I know best. I have been a Perl man for the bulk of the last 10 years. As my current position is sysadmin, I met “the Rails way” with a lot of skepticism and wasn’t exactly a fan of the shift. Rails’ reputation as a resource hog, and the way it puts much of the database handling directly in the hands of the developers, didn’t sit well with me. Migrations give me the heebie jeebies. Over the last few months I’ve come around a little and can see why the guys like it; the framework itself, in the abstract, is a decently clean idea. From the little experience I’ve had playing with it outside work, there are a lot of pieces you don’t have to code up that you would in the traditional Perl world. I find myself authoring much less code than I previously would, though I believe I could find Perl frameworks that do most of this (see Gantry). In a work environment, though, it is very hard to justify getting rid of the old if you are not bringing in something new, and it’s a challenge to get people energized about doing the same thing over again.

My main point, though, is that for the developer Rails is easy; for the sysadmin it is not. When running Perl apps you really have a standard, which is mod_perl and Apache. I suppose there are other choices out there, but my guess is that maybe 1 in 100 applications runs in a different environment. In the Ruby on Rails world it is not so simple. First there is the framework you are going to use, which sounds silly when we are talking about Ruby on Rails since that is the framework, but there are quite a few notable Ruby frameworks such as Merb, Ruby Waves and Camping, so Rails isn’t the only thing to consider. (A glut of frameworks is one of the anti-Perl flags that gets waved.) Next you need to pick the application server piece: Mongrel, Thin, WEBrick (not), mod_ruby (Apache, Lighttpd) or one of the host of other up-and-comers. On top of that comes the load balancing and static serving piece, for which you could use Apache, Lighttpd, Nginx and more, making another equally extensive list of options. If you are going to run an application of any decent size you’re going to need that latter piece. In my experience, with Mongrel at least, your application server is going to have a memory footprint next to which your largest mod_perl app will look minuscule. Keeping your static requests apart from your dynamic ones becomes a necessity rather than a nice option you could add later if traffic causes problems. Add-ins, which are gems in the Ruby world, become an issue too. In my time I’ve created and experienced some pretty nasty Perl module hell, and from what I’ve seen of gems so far they neither change that problem much nor do anything revolutionary to fix it. Why the hell do the later versions of RMagick need Rails 2.0? To me that is like Image::Magick telling you it needs a certain version of Catalyst.
With all of this in mind, and since I’m using Red Hat, it has additionally put me back to compiling things by hand, as there are no RPMs for the base packages; I suppose I always have the option of creating them myself. As Ruby frameworks are fairly new on the scene, there hasn’t been enough time for things to congeal around front runners for all of these options, and at times I feel like I’m dealing with Perl back in 2001.

Rails is nice for the developer, but the sysadmin… not so much.

worm

I’ve been able to get more reading done lately. One book I’ve read is Upping the Anti- by Tom Gillis, which was given to us by one of our Cisco vendors upon request. The book itself isn’t bad; it covers the basics of spam, virus and spyware happenings in the last few years. If you don’t know much about these topics and are looking for a primer, this is a good book. As someone who has worked with these things for a while, I didn’t find anything new here; it was just a rehash of what I already know. Many times there are tidbits in books like this that I find interesting or that happen to fill gaps in my knowledge, but this book didn’t have any of those. If you are someone who’s been working in tech for a while and has had even remote dealings with these things, this is not the book for you. It might be a good one to get for someone you know who gets that blank look on their face when you mention updating a virus database or delve into why dealing with spam is such a pain in the ass. It does jump into ideas about solving the problems of the internet, though I imagine none of them are anything two tech-savvy people, dealing with this stuff on a regular basis, haven’t come up with on their own while chatting over a cup of coffee.

The other book I’ve been reading is First, Break All the Rules, on recommendation from a fellow geek. At this point I’m about two thirds of the way through and it will probably be the most influential book I’ve read in the past few years. It’s not a new book, copyright 1999, but what I’m reading in there seems revolutionary. In truth it’s not really that revolutionary; it’s just that the ideas make sense, while conventional wisdom in management and human resources doesn’t follow this track. I like that the book is founded on a vast research study done by Gallup, a company which, being a Nebraskan, I’ve always had respect for. The authors do a good job of presenting ideas and following them up with real world applications to illustrate the point. I would suggest this book to anyone in a corporate environment, and even more strongly to managers and HR people.

It’s been a long time since I directly managed people. The advice I recall being given at the time ran along the same path as what “everyone is doing”, which this book points out has obvious pitfalls. In fact, in many instances I was told I was doing things incorrectly, and in this book those same things are pointed to as attributes of what they call “great managers”. In no way, shape or form would I refer to myself as a great manager. What I can say is that during that time period we had a small group of people who did a tremendous amount of work and had fun while doing it.

rotate those rails logs

As we come closer to going live with a high-traffic Rails app it’s time to hammer out log rotation. Thus far I’ve established a standard deployment location of /usr/local/sites/sitename for our apps. Following this advice I think I’ll use standard logrotate to move the logs around. I’ve read that log rotation can be done within Rails itself, but I’m already rather afraid of Rails bloat at this point, and the fewer things the framework has to worry about the better. Additionally, as an admin, if the logging parameters aren’t meeting my needs, having to go into the Rails app and redeploy it to make a change is just a bad idea. To that end I’ve come up with the following logrotate conf file, which I’m dropping into /etc/logrotate.d/ as a file named rails:

    # Rotate Rails application logs
    /usr/local/sites/*/shared/log/*.log {
        daily
        missingok
        compress
        delaycompress
        notifempty
        copytruncate
        dateext
    }

I’m not deleting old logs by default at this point, but will add that once we’ve been up and rotating and backing up logs for a decent amount of time.
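
Before trusting a config like this on a live app, it can be exercised by hand. A rough sketch, assuming the file sits at /etc/logrotate.d/rails as described above; the two function names and the RAILS_CONF variable are made up for illustration, while `-d`, `-v` and `-f` are standard logrotate switches:

```shell
# Path taken from the post; override by setting RAILS_CONF before sourcing.
RAILS_CONF="${RAILS_CONF:-/etc/logrotate.d/rails}"

# Debug mode: logrotate reports what it would do and changes nothing.
rotation_dry_run() {
    logrotate -d "$RAILS_CONF"
}

# Force one rotation right now, verbosely, to confirm the logs really move.
rotation_force() {
    logrotate -v -f "$RAILS_CONF"
}
```

The dry run is the safe one to start with. The forced run needs enough privilege to write in the log directories and really does rotate the files.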

Flat on your face

Tonight’s ponderance is: how long will Facebook last before it goes flat? Don’t get me wrong, I really do like Facebook and I’m very impressed by all the clicky widgetry and modules people have written. I just don’t see how Facebook will manage to support itself with advertising once it becomes yesterday’s cool thing (like Second Life) and people start to find the next latest, greatest thing. At that point the “cool factor” isn’t going to keep the CPM rate for largely useless ads on Facebook or Facebook apps at anything worth making money off of. In addition, I imagine the page churn rate created by monster sites like this will probably drive ad revenues down for sites not based on churn, where people might actually look at an ad. Google shouldn’t reconfigure for slow loading pages, but for pages that load too often. I would say the best support for Facebook in the long term is the micropayment model, as there are enough suckers out there willing to buy the $1 limited edition (only 1 million of them) white baby seal to gift to a friend. Actually, my guess is that micropayments are the revenue model that will really hit from 2010-2015, and the smart people have already gained some great traction in this market, such as iTunes, a few MMORPGs, Wii and Xbox360. I’ll be interested to see what props up Facebook in 2010, as I imagine it won’t be ads, and I think the four choices will be:

- paid consumer mini sites (which will fail as people leave, see Second Life)

- some sort of premium subscription services (again user bailout will kill this)

- selling user data for marketing and research (now that would be a shit storm)

- micropayments (as mentioned earlier)

Of course there are already over 50 “free gift” applications that come up under a Facebook application search, so maybe that won’t be its saving grace.

Technorati

I decided I’d try to get authorized on Technorati, for no real reason other than that I was browsing and it fell into my “hey that would be neat” category. Their process looks extremely easy and involves three steps:

- Create Account

- Get Code to put in blog post

- Click a button to validate it

Unfortunately I don’t seem to be technically skilled enough to get it to work. I’ll have you know I’ve filled out web forms and posted entries successfully before. I find it annoying that there is an option called “2. Choose Claim Method” in which there is only one claim method. If there is only one damn way to do this, then don’t make it sound like you have engineered some behind-the-scenes marvel which does it in a variety of ways. So, surprisingly, I chose the “Post Claim” method, made my post here on my blog and made sure it was visible. Back on Technorati there was now a button to “Release the Spiders”. Since links to my blog already appear in Technorati, I realize they are already spidering my site and this is another bullshit injected step. Being the daring person that I am, I decided to hit the button. Lo and behold, the message I got back said that it couldn’t find the code in my page, which of course I can find in my page, and anyone with a web browser will see it there too. My quick half-assed perusal of their FAQ gave me the impression that this method of authentication might not be working anymore, which I guess means their step 2 heading should be changed to “2. Be annoyed while we put you through this invalid claim method”. If it is working, my guess is that they have some sort of backend cache and are hitting a stored version of my page rather than actually making a request to it, even though I was supposedly prompting them to go out and do so at the click of a button. At this point I’ve surpassed my “hey that would be neat” threshold and I’m starting to get into FAQs and mentally reverse engineering their system. HALT, this is way more effort than I should be putting into this.

BTW – Five minutes later and I’ve installed a wordpress plugin to do all those damn “Digg This” type icons and links.

text conversion

dos2unix is something I’ve used for ages, converting Windows files into something prettier for my Linux machine. Looking for it on my new install of Ubuntu, I discovered it’s within the tofrodos package, which I must note for later reference.
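
For the times the package isn’t installed yet, a rough stand-in can be built from tr. This is only a sketch, not what dos2unix itself does internally: the function name is made up, and it removes every carriage return in the input, not just the ones at line ends.

```shell
# Installing the real tool on Ubuntu, per the note above:
#   sudo apt-get install tofrodos

# strip_cr is a hypothetical helper: it reads stdin and drops all CR characters.
strip_cr() {
    tr -d '\r'
}
```

Usage would be along the lines of `strip_cr < windows.txt > unix.txt`, with both file names being placeholders.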

backwards

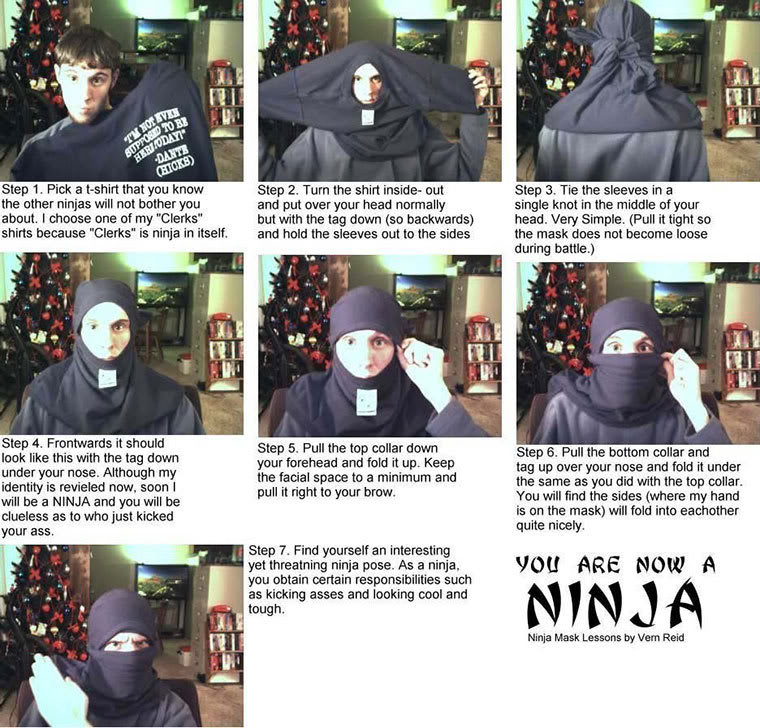

So after getting home today I realized that I’d had my T-shirt on backwards all day. I spoke with multitudes of people today, none of whom mentioned it. While I was wearing a button-up shirt over my T-shirt, it did not take more than a glance in the mirror at home to notice something was wrong. It really wasn’t that I was just tired and blurry when I got dressed this morning; the “real” reason it was on backwards was that I was building a server today, and any time I build a server I must do this:

rhel5 via usb

So I’ve got my spiffy new HP DL360 G5 unboxed and pieced together, and then I realize that they don’t come with CD/DVD drives in the low end package, something I should remember by now. That means the DVD of RHEL5.1 I’d burned yesterday to make sure I was ready is pretty much useless. I know I can boot from a USB stick, but I left the ones I’d made out at the facility, so now I have to dig up the instructions again and remember how to do it. So here they are (your locations may vary):

- Insert RHEL5.1 DVD into laptop, it becomes /media/cdrom

- Insert thumbdrive into laptop, it becomes /dev/sdb1 (/media/disk)

- cd /media/cdrom/images/

- dd if=diskboot.img of=/dev/sdb1

- remove thumbdrive

- insert thumbdrive in DL360 front usb port

- boot DL360 and install from my share

Pretty damn easy really. Oh yeah, remember to copy anything you have off the thumb drive first or it will be gone.
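
The dd step above can be wrapped so it at least refuses to run without a readable image and flushes the write before the stick is pulled. A sketch only: the function name is made up, both arguments are placeholders, and it assumes GNU dd (for `bs=1M`). Unmounting the thumb drive first, if the desktop auto-mounted it, is probably wise as well.

```shell
# write_boot_image is a hypothetical helper: IMAGE TARGET.
# dd overwrites whatever TARGET points at, so double check the device name
# (dmesg or /proc/partitions) before running it for real.
write_boot_image() {
    img="$1"
    target="$2"
    # Bail out early if the image is missing or unreadable.
    [ -r "$img" ] || { echo "cannot read $img" >&2; return 1; }
    # Copy the image, then flush buffers so the data is really on the stick.
    dd if="$img" of="$target" bs=1M && sync
}
```

With the locations from the list above, that would be `write_boot_image /media/cdrom/images/diskboot.img /dev/sdb1`.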